Read a Delta Table into a DataFrame with PySpark

In the yesteryears of data management, data warehouses reigned supreme with their… This post looks at how to read a Delta Lake table into a PySpark DataFrame. I used a little PySpark code to create a Delta table in a Synapse notebook, and the basic workflow is short: create a DataFrame with some range of numbers, write the DataFrame out as a Delta Lake table, and then read the table back into a DataFrame.

Here's how to create a Delta Lake table with the PySpark API when you want to control the column types yourself. The types come from `pyspark.sql.types`; the original snippet starts with `from pyspark.sql.types import *` and builds a DataFrame named `dt1` (`dt1 = (…`). Write that DataFrame out as a Delta Lake table registered in the catalog, and you can easily load the table back into a DataFrame by name.

To load a Delta table into a PySpark DataFrame, you can use the `DataFrameReader` in one of two ways: `spark.read.format("delta").load(path)` reads the file(s) at a path, while `spark.read.table(name)` reads a table that is already stored in the catalog (aka the metastore). Databricks' own Delta Lake tutorial introduces these and other common Delta Lake operations on Databricks.

The Delta Lake quickstart guide helps you quickly explore the main features of Delta Lake. It provides code snippets that show how to read from and write to Delta tables from interactive, batch, and streaming queries. One of those features matters for reading: every write creates a new table version, and you can read an earlier version into a DataFrame with time travel (the `versionAsOf` read option).

Spark SQL is another way to read a table into a DataFrame. Azure Databricks uses Delta Lake for all tables by default, so any table you query by name there with `spark.sql(...)` is a Delta table; a Delta table stored at a path can also be queried directly as `` delta.`<path>` `` in the `FROM` clause. Either way, `spark.sql` returns a DataFrame.

PySpark's pandas API on Spark (`pyspark.pandas`) has readers of its own: `read_table` reads a Spark table and returns a DataFrame, and `read_delta` reads a Delta Lake table on some file system and returns a DataFrame. Both return a pandas-on-Spark DataFrame rather than a PySpark `DataFrame`; call `to_spark()` on the result when you need the latter.

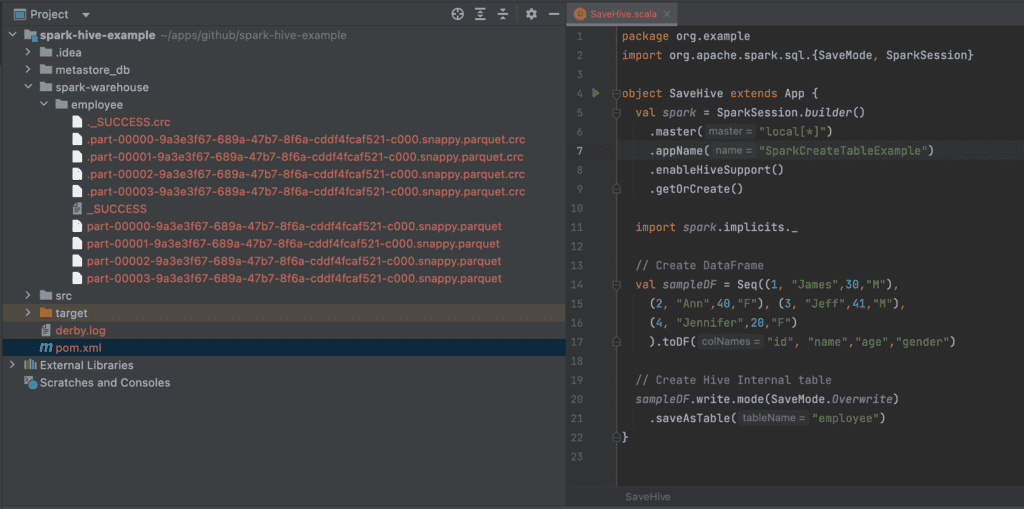

Spark SQL Read Hive Table Spark By {Examples}

Delta Lake records the table schema in the table's transaction log, so reading a Delta Lake table on some file system returns the column names and types it was written with. Declaring the schema with `pyspark.sql.types` before the first write is a simple way to make sure the stored types are the ones you intended.

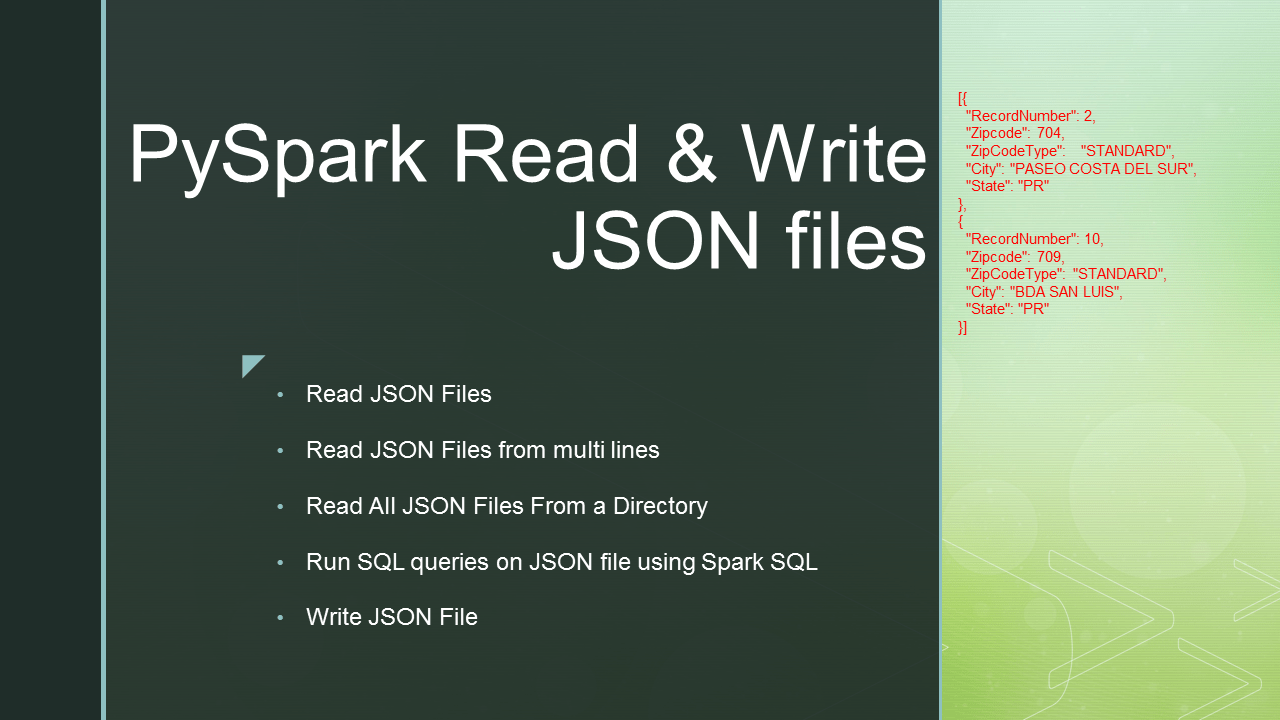

PySpark Read JSON file into DataFrame Blockchain & Web development

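When you write the DataFrame out as a Delta Lake table, the save mode decides what happens to data that is already there: `append` adds rows, `overwrite` replaces the table's contents with a new version, and the default mode, `errorifexists`, fails if the table already exists. The modes behave the same whether the table lives at a path or in the catalog, and whether you run the code on Databricks, where Delta Lake is the default for all tables, or in a Synapse notebook.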

Delta tables can also be read as a stream. In Scala, the example imports `io.delta.implicits._` and calls `spark.readStream.format("delta").table("events")`; PySpark exposes the same `readStream.format("delta").table(...)` call, or `.load(path)` for a table stored at a path. A streaming read processes the data already in the table and then any new data that arrives after the stream starts.

Because the stream keeps running, you can create a DataFrame with some range of numbers, append it to the same Delta Lake table, and the streaming query picks up the new rows in its next micro-batch.

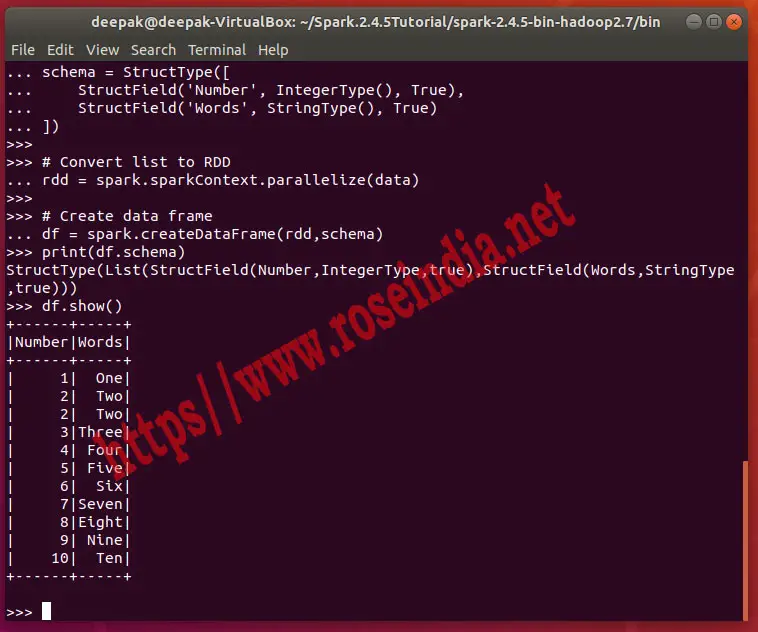

With PySpark read list into Data Frame

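On disk, a Delta Lake table is a directory of Parquet data file(s) plus a `_delta_log` folder that holds the transaction log, with one JSON commit file per table version. That is why you read it with `format("delta")`: the Delta reader consults the log to decide which files make up the current version, whereas the files alone do not say which of them are still part of the table.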

One caveat for streaming reads: if the schema for a Delta table changes after a streaming read begins against the table, the query fails. For most schema changes, you can restart the stream to resolve the mismatch and continue processing.

On Databricks, Delta Live Tables builds on the same two kinds of read. In Python, Delta Live Tables determines whether to update a dataset as a materialized view or streaming table based on the defining query: a query that performs a static read defines a materialized view, and one that performs a streaming read defines a streaming table.

The index_col Parameter

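The pandas-on-Spark readers and writers accept an optional `index_col` argument, a str or list of str naming the column(s) that represent the DataFrame's index in Spark. `DataFrame.to_table()` writes the DataFrame into a Spark table, and by default the index is lost; `read_table()` reads a table into a DataFrame and, without `index_col`, attaches a new default index. Passing the same `index_col` to both keeps the index through the round trip.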

Databricks Uses Delta Lake for All Tables by Default

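Because Databricks uses Delta Lake for all tables by default, reading a table by name with `spark.read.table(...)` or `spark.readStream.table(...)` returns Delta data there without a `format("delta")` option. Outside Databricks, the same calls work once the Delta Lake extensions are configured on the Spark session.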

DataFrame.to_table and Its Alias

In the pandas API on Spark, `DataFrame.spark.to_table()` is an alias of `DataFrame.to_table()`, so either spelling writes the DataFrame into a Spark table. To load that Delta table into a PySpark DataFrame afterwards, you can use `spark.read.table()` with the same name.

Reading From a Path or From the Catalog

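To sum up, use `spark.read.format("delta").load(path)`, or `pyspark.pandas.read_delta`, to read a Delta Lake table on some file system and return a DataFrame. If the Delta Lake table is already stored in the catalog (aka the metastore), read it by name with `spark.read.table()`, or `pyspark.pandas.read_table`, instead.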