Spark Read Parquet From S3

Parquet is a columnar format that is supported by many other data processing systems. To read Parquet data from an AWS S3 bucket in Spark, use the `spark.read.parquet()` function. A common first attempt builds a session with `SparkSession.builder.master("local").appName("app name").config("spark.some.config.option", "true").getOrCreate()` and then calls `spark.read.parquet("s3://path/to/parquet/file.parquet")`. On a plain Apache Spark installation this fails because the file scheme (`s3`) is not correct. Outside Spark, the same data can be read with `dask.dataframe`. The example provided here is also available in a GitHub repository for reference.

How do you read Parquet data from S3 into a Spark DataFrame in Python? Pass the S3 location to `spark.read.parquet()`, but use the `s3n` scheme or `s3a` (the better choice for bigger S3 objects) instead of `s3`. Note that `s3n` was removed in Hadoop 3, so `s3a` is the scheme to use on current releases.
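As a minimal sketch of the scheme fix, the helper below rewrites `s3://` and `s3n://` locations to `s3a://` before handing them to `spark.read.parquet()`. The function names `to_s3a` and `read_parquet_from_s3` are hypothetical, and `spark` is assumed to be an existing `SparkSession` with the S3A connector available.

```python
def to_s3a(uri: str) -> str:
    """Rewrite an s3:// or s3n:// URI to s3a://; leave anything else untouched."""
    for prefix in ("s3://", "s3n://"):
        if uri.startswith(prefix):
            return "s3a://" + uri[len(prefix):]
    return uri


def read_parquet_from_s3(spark, uri: str):
    """Read Parquet through the S3A connector.

    `spark` is assumed to be an existing SparkSession (hypothetical setup).
    """
    return spark.read.parquet(to_s3a(uri))
```

On platforms that ship their own `s3://` connector (Amazon EMR, for instance), the rewrite is unnecessary and the original URI can be passed through unchanged.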

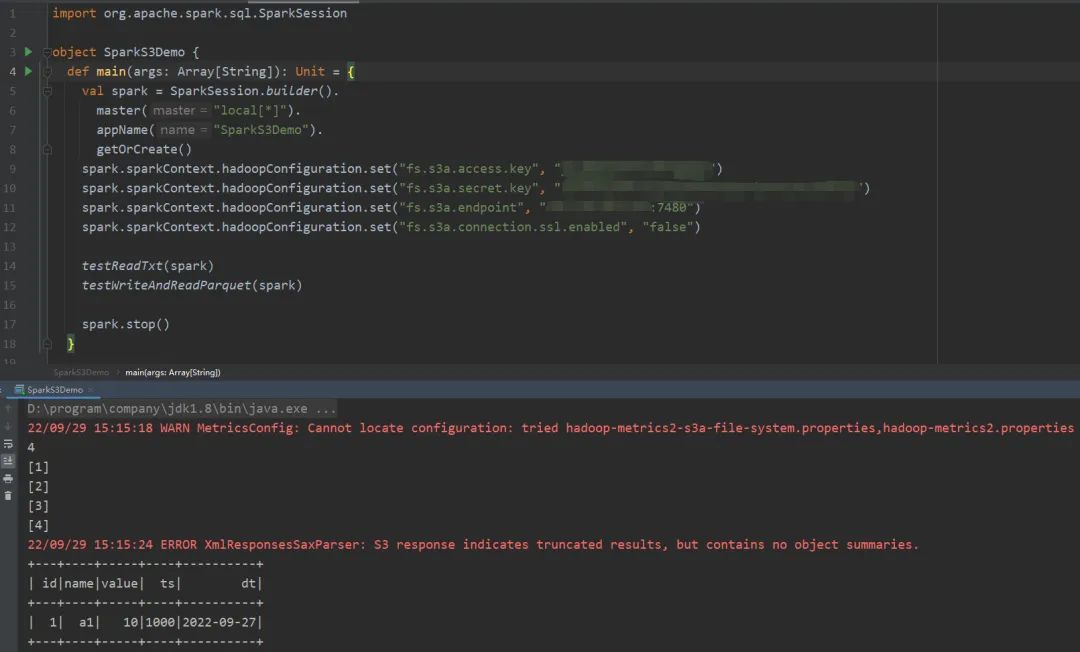

Spark can read and write data in object stores through filesystem connectors implemented in Hadoop or provided by the infrastructure suppliers themselves, so reading Parquet comes down to passing the S3 bucket URL to `spark.read.parquet()`. When reading Parquet files, all columns are automatically converted to be nullable for compatibility reasons. For Spark table metadata, we are going to use the Glue Data Catalog along with EMR. Plain text files are handled by different methods: `sparkContext.textFile()` and `sparkContext.wholeTextFiles()` read a text file from Amazon S3 into an RDD, while `spark.read.text()` and `spark.read.textFile()` read it through the DataFrame reader.
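The connector has to be configured before Spark can reach the bucket. The following is a rough sketch, not a definitive setup: `fs.s3a.access.key`, `fs.s3a.secret.key`, `fs.s3a.endpoint`, and `fs.s3a.path.style.access` are Hadoop S3A options passed to Spark with the `spark.hadoop.` prefix, while the helper names `s3a_conf` and `build_session` are hypothetical. It also assumes that the `hadoop-aws` module matching your Hadoop version is on the classpath.

```python
def s3a_conf(access_key, secret_key, endpoint=None):
    """Return Spark settings for the Hadoop S3A connector as a plain dict."""
    conf = {
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
    }
    if endpoint:
        # A custom endpoint is typical for S3-compatible stores (assumption:
        # such stores usually also need path-style bucket addressing).
        conf["spark.hadoop.fs.s3a.endpoint"] = endpoint
        conf["spark.hadoop.fs.s3a.path.style.access"] = "true"
    return conf


def build_session(app_name, conf):
    """Create a SparkSession with the given settings applied."""
    from pyspark.sql import SparkSession  # imported lazily; requires pyspark

    builder = SparkSession.builder.appName(app_name)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

Keeping the settings in a dict makes them easy to inspect or log (minus the secret) before a session is created. In practice the credentials would come from the environment or an instance role rather than from literals in code.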

Spark Read Files from HDFS (TXT, CSV, AVRO, PARQUET, JSON) bigdata

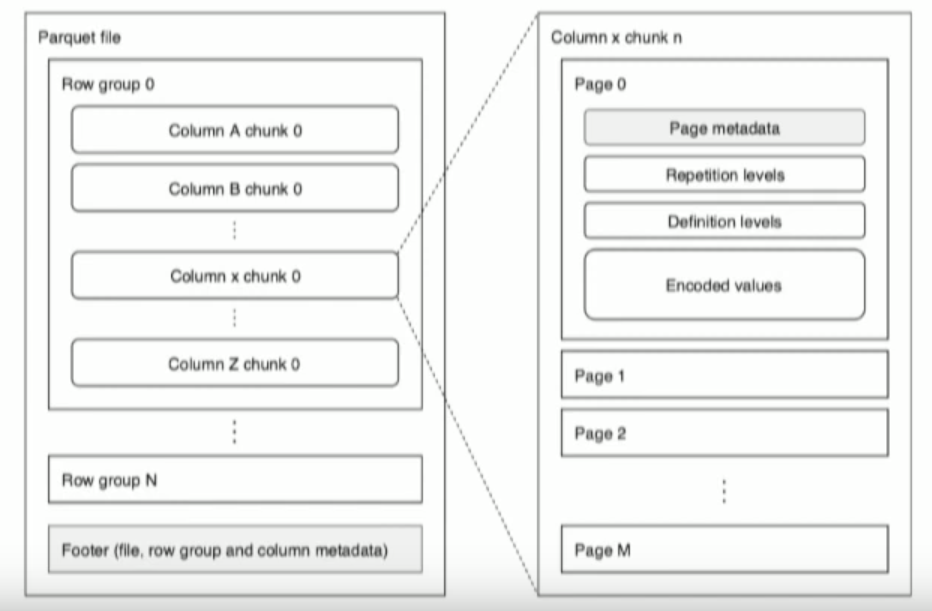

Now, let's read the Parquet data from S3. The dataset in question is partitioned by class and date, and there are only 7 classes. When reading Parquet files, all columns are automatically converted to be nullable for compatibility reasons.

Spark Parquet Syntax Examples to Implement Spark Parquet

The filesystem connectors make the object stores look almost like file systems, so Parquet data in S3 is read with the same `spark.read.parquet()` call that is used for local data.

PySpark read parquet Learn the use of READ PARQUET in PySpark


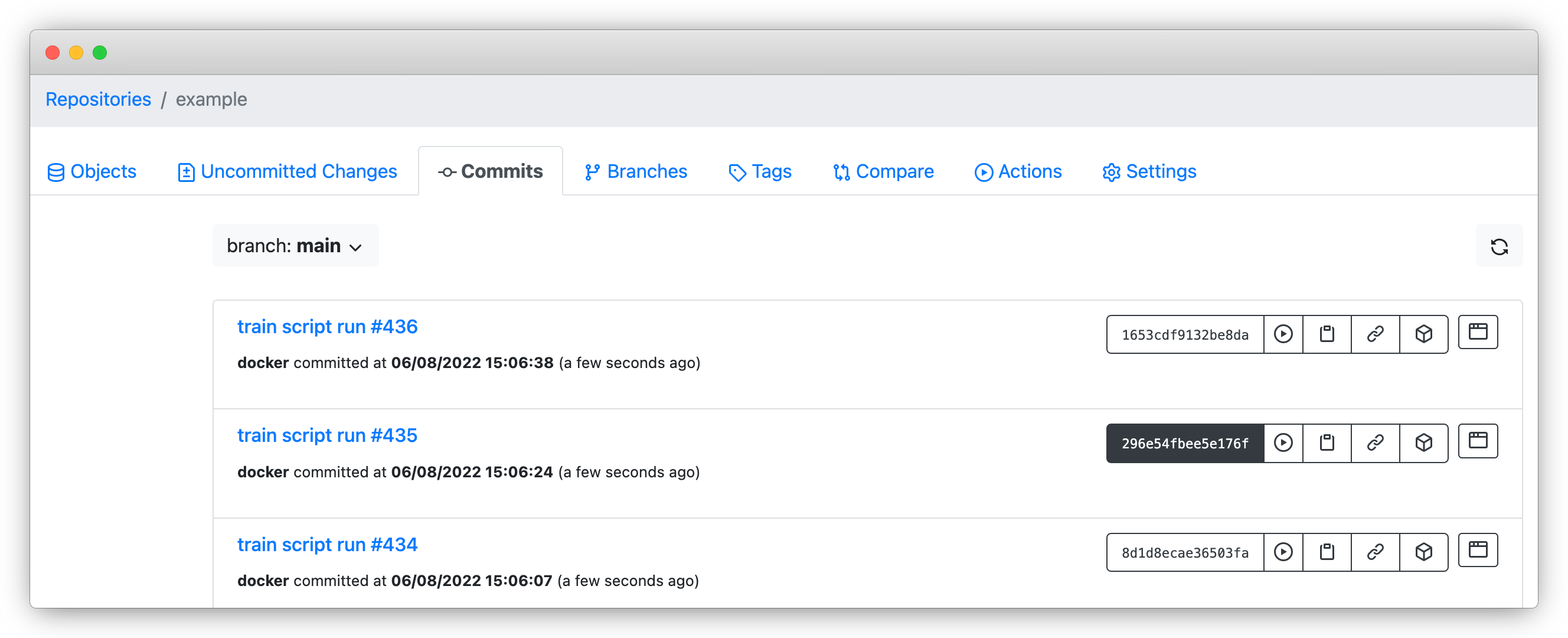

Reproducibility lakeFS

Probably the easiest way to read Parquet data on the cloud into dataframes is to use `dask.dataframe`: import it as `dd` and call `dd.read_parquet()` with the `s3://` location of the data.
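A hedged sketch of the Dask route follows. `dd.read_parquet()` and its `columns` and `storage_options` parameters are part of Dask, and `key`, `secret`, and `anon` are options understood by `s3fs`. The helper names are hypothetical, and both `dask` and `s3fs` are assumed to be installed.

```python
def s3_storage_options(key=None, secret=None, anon=False):
    """Build the options dict that Dask forwards to s3fs."""
    if anon:
        return {"anon": True}  # public bucket, no credentials
    if key and secret:
        return {"key": key, "secret": secret}
    return {}  # let s3fs pick up credentials from the environment


def read_parquet_with_dask(path, columns=None, storage_options=None):
    """Lazily load Parquet data into a Dask DataFrame."""
    import dask.dataframe as dd  # imported lazily; requires dask and s3fs

    return dd.read_parquet(path, columns=columns, storage_options=storage_options)
```

Unlike the Hadoop connector, Dask expects the plain `s3://` scheme, so no rewrite to `s3a://` is needed here.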

Spark Read and Write Apache Parquet Spark By {Examples}

Spark SQL provides support for both reading and writing Parquet files that automatically preserves the schema of the original data. Reading is done with the `spark.read.parquet()` function.

apache spark Unable to infer schema for Parquet. It must be specified


Write & Read CSV file from S3 into DataFrame Spark by {Examples}

`DataFrameReader.parquet()`, reached as `spark.read.parquet()`, loads Parquet files, returning the result as a DataFrame. For Spark table metadata, the Glue Data Catalog can be used along with EMR.

Spark Parquet File. In this article, we will discuss the… by Tharun


The Bleeding Edge Spark, Parquet and S3 AppsFlyer


Spark Read and Write Ceph S3: Getting-Started Study Summary 墨天轮

Read Parquet data from an AWS S3 bucket with `dataframe = spark.read.parquet('s3a://your_bucket_name/your_file.parquet')`. Replace `'s3a://your_bucket_name/your_file.parquet'` with the actual path to your Parquet file in S3.

Spark Can Read And Write Data In Object Stores Through Filesystem Connectors Implemented In Hadoop Or Provided By The Infrastructure Suppliers Themselves.

Through these connectors, a Spark DataFrame can be written to and read from the Parquet file format in an object store, from Scala as well as from Python. With the Hadoop connector, a path such as `s3://path/to/parquet/file.parquet` fails because the file scheme (`s3`) is not correct.

Parquet Is A Columnar Format That Is Supported By Many Other Data Processing Systems.

Spark SQL provides support for both reading and writing Parquet files that automatically preserves the schema of the original data. For Spark table metadata, the Glue Data Catalog can be used along with EMR.

Reading A Large Partitioned Parquet Dataset.

One reported case involves a large dataset in Parquet format (~1 TB in size) that is partitioned into 2 hierarchies, class and date, with only 7 classes. When reading Parquet files, all columns are automatically converted to be nullable for compatibility reasons.
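For a dataset partitioned by class and date, it is often cheaper to read only the partitions that are needed. The sketch below assumes a Hive-style directory layout (`class=<value>/date=<value>`), which the source does not confirm. The helper names and the example values are hypothetical, while `basePath` is a Spark partition-discovery option.

```python
def partition_path(base, partitions):
    """Join a base location with Hive-style partition segments, in dict order."""
    segments = [f"{name}={value}" for name, value in partitions.items()]
    return "/".join([base.rstrip("/"), *segments])


def read_partition(spark, base, partitions):
    """Read a single partition of the dataset.

    Setting basePath keeps the partition columns (here class and date)
    in the resulting DataFrame's schema. `spark` is assumed to be an
    existing SparkSession.
    """
    reader = spark.read.option("basePath", base)
    return reader.parquet(partition_path(base, partitions))
```

A call such as `read_partition(spark, "s3a://your_bucket_name/data", {"class": "a", "date": "2020-01-01"})` would then touch only that one directory instead of listing the whole dataset.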

Spark Sql Provides Support For Both Reading And Writing Parquet Files That Automatically Preserves The Schema Of The Original Data.

Parquet data is read with the `spark.read.parquet()` function. Text files in Amazon S3 are read with other methods: `sparkContext.textFile()` and `sparkContext.wholeTextFiles()` load them into an RDD, while `spark.read.text()` and `spark.read.textFile()` go through the DataFrame reader.